Imagine a modern factory floor. You don't see workers carrying parts from one station to the next by hand. Instead, you see a sophisticated assembly line, a system of conveyor belts and robotic arms that takes raw materials and turns them into a finished product, all without stopping.

That, in a nutshell, is what data pipeline automation does for your data. It’s the technology that automatically moves raw data from all your different sources, cleans and shapes it into a useful format, and then delivers it for analysis—all without someone needing to manually click "run."

At its heart, data pipeline automation is about creating a self-running, hands-off system for all your data flows.

Think about the old way of doing things. Managing data manually is like a bucket brigade at a fire. An engineer has to grab a "bucket" of data from one system, run it over to another, clean it up by hand, and then finally pass it off for someone else to use. This isn't just slow and mind-numbingly tedious; it's a recipe for disaster. One slip-up, one tiny mistake, can contaminate an entire dataset, leading to bad reports and even worse business decisions.

Data pipeline automation gets rid of the bucket brigade and replaces it with a seamless, programmatic flow. It’s a digital conveyor belt that runs 24/7, making sure data gets from point A to point B efficiently and reliably. This isn't just a small tweak; it’s a completely different way of thinking about how companies manage their most valuable asset. The economic impact speaks for itself—the global market for data pipeline tools was valued at roughly $11.24 billion and is expected to hit $13.68 billion the following year, a healthy growth rate of about 21.8%. You can dig deeper into these trends in this detailed data pipeline tools market analysis.

The difference between a manual and an automated pipeline is night and day. While they both have the same goal—moving data—how they get there and the results they produce are worlds apart. Manual pipelines rely on engineers to write and run scripts for every single step, a method that just can't keep pace with the sheer amount of data businesses deal with today.

On the other hand, an automated pipeline is built from the ground up for reliability and scale. It uses orchestration tools to schedule, run, and monitor entire workflows, making sure every task happens in the right order and on time.

This approach breaks the operational bottleneck. It frees up your team from the constant, repetitive work of just keeping the lights on. Instead, they can focus their brainpower on what really matters, like building new data products or uncovering game-changing insights.

To really see the difference, let’s compare them side-by-side.

This table breaks down the fundamental differences between the two approaches, showing why automation has become the standard for modern data teams.

Ultimately, switching to data pipeline automation isn't just a technical upgrade; it's a strategic one. It gives your organization the ability to make quicker, more confident decisions because you can finally count on a constant stream of clean, reliable, and timely data.

Let's move beyond theory. The practical advantages of data pipeline automation show up directly in your business results. When you automate how data moves through your company, you're not just making a technical tweak—you're making a strategic decision. This leads to cleaner data, more efficient operations, and a real competitive advantage because you can get insights faster than ever before.

This isn't just a fleeting trend; it's a fundamental shift. Estimates vary by source, but they point the same way: another analysis values the global data pipeline tools market at $10.22 billion and expects it to reach $12.53 billion the following year. That kind of growth shows how critical automation is becoming for handling today's data deluge. If you want to dive deeper, you can check out these detailed data pipeline tools market insights.

Let’s be honest: human error is one of the biggest risks to data integrity. No matter how careful your team is, manual data entry and processing will always introduce mistakes. A simple typo, a wrong format, or a missed update can snowball into huge problems, leading to bad analysis and even worse decisions.

Data pipeline automation stops many of these errors before they start. You define the rules and validation checks once, and the system applies them the same way on every run.
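Here is a minimal Python sketch of what "define the rules once" can look like, assuming records arrive as dictionaries. The field names (`order_id`, `amount`, `currency`) and the three rules are hypothetical examples, not a prescribed rule set. The point is that every batch passes through the same checks, and failures are recorded rather than silently dropped.

```python
from typing import Any, Callable

# Hypothetical rule set: each rule is a readable name plus a predicate on one record.
Rule = tuple[str, Callable[[dict[str, Any]], bool]]

RULES: list[Rule] = [
    ("order_id present", lambda r: bool(r.get("order_id"))),
    ("amount is non-negative",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    ("currency is ISO code",
     lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3),
]


def validate(records: list[dict[str, Any]], rules: list[Rule] = RULES):
    """Split records into valid rows and rejected rows with the rules they broke."""
    valid, rejected = [], []
    for record in records:
        failures = [name for name, check in rules if not check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"order_id": "A1", "amount": 19.99, "currency": "USD"},
    {"order_id": "", "amount": -5, "currency": "usd dollars"},
]
valid, rejected = validate(batch)
```

Each rejected row keeps the names of the rules it broke. That makes it easy to route bad rows to a quarantine table for review instead of losing them.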

Ultimately, this consistency builds trust. When your leadership team knows the data is solid, they can make bold decisions with confidence.

Your data engineering team is incredibly valuable. So why is their time often spent on boring, repetitive tasks? Manually running scripts, troubleshooting jobs that failed overnight, and patching together data flows is a massive drain on both resources and morale.

Automating these workflows frees your engineers from the daily grind of firefighting. Instead of propping up fragile manual processes, they can focus their brainpower on what really matters: building new data products, optimizing system performance, and exploring innovative data sources.

This shift directly fuels productivity and creativity. When you empower engineers to solve bigger, more interesting problems, the entire business wins. This efficiency isn't just for general operations, either. It can be applied to specialized fields like environmental reporting. For anyone interested in that space, you can learn more about achieving carbon data automation for efficiency.

In today's market, the faster you get insights, the faster you can act. Manual pipelines create a frustrating lag between when data is created and when it’s actually useful. That delay could mean missing a golden market opportunity or failing to spot a crisis before it gets out of hand.

Automated pipelines shrink this lag dramatically. They can run continuously or on a tight schedule, so fresh, reliable data is on hand whenever someone needs to analyze it.

Consider this real-world retail example:

Imagine a retail chain trying to analyze daily sales to manage inventory and tweak marketing campaigns.

This speed—from raw data to actionable insight—is what allows organizations to become truly agile and responsive. It’s how data goes from being a chore to being your greatest strategic asset.

To really get what makes data pipeline automation tick, you have to look under the hood. An automated pipeline is a lot like a complex machine, built from several core parts that each have a specific, crucial job. When all these pieces work in sync, they create a self-sufficient system that shuttles data from point A to point B with incredible speed and accuracy.

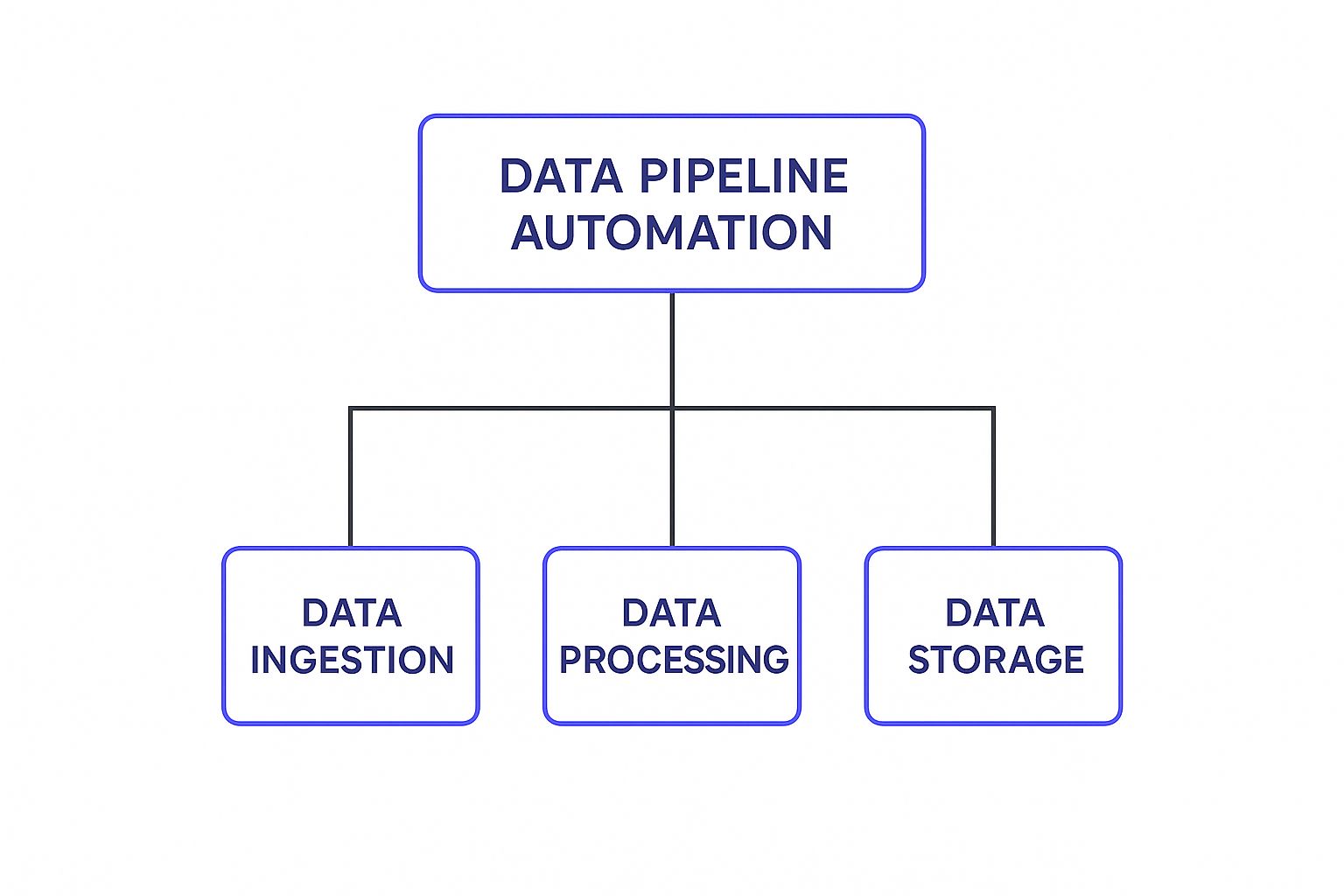

This diagram lays out the fundamental stages of the process.

As you can see, it boils down to three key pillars: getting the data in (Ingestion), making it useful (Processing), and keeping it safe (Storage). Let's dive into each of these, along with a couple of other vital components, to see how it all comes together.

Every data pipeline has a beginning. These starting points, or data sources, are the different places where your raw data is first created and stored. The sheer variety of potential sources is why you need a flexible system that can connect to just about anything.

You’ll typically find data coming from places like:

The big challenge here is that every source speaks its own "language," with unique formats and structures. A good automated pipeline has to act as a universal translator.
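As a rough illustration of that translator role, the sketch below maps records from two hypothetical sources onto one shared set of fields. One source is a CRM API that returns camelCase keys; the other is a CSV export with its own column names. Both source formats and all field names are assumptions chosen for the example.

```python
import csv
import io
from typing import Any

# Hypothetical target schema shared by every downstream step.
COMMON_FIELDS = ("customer_id", "email", "signup_date")


def from_crm(record: dict[str, Any]) -> dict[str, Any]:
    """Translate a record from a hypothetical CRM API that uses camelCase keys."""
    return {
        "customer_id": str(record["customerId"]),
        "email": record["emailAddress"].strip().lower(),
        "signup_date": record["createdAt"][:10],  # ISO timestamp -> date part only
    }


def from_csv_export(text: str) -> list[dict[str, Any]]:
    """Translate rows from a hypothetical CSV export with different column names."""
    return [
        {
            "customer_id": row["id"],
            "email": row["Email"].strip().lower(),
            "signup_date": row["Signed Up"],
        }
        for row in csv.DictReader(io.StringIO(text))
    ]


crm_rows = [from_crm({"customerId": 42,
                      "emailAddress": " Ana@Example.com ",
                      "createdAt": "2024-03-01T09:30:00Z"})]
csv_rows = from_csv_export("id,Email,Signed Up\n7,bo@example.com,2024-02-15\n")
unified = crm_rows + csv_rows  # one shape, regardless of where each row came from
```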

Once you’ve pinpointed your sources, the next job is ingestion—the actual process of pulling that data into your pipeline. Think of it as the on-ramp to a data highway. The main goal is to get data out of its siloed system and onto the main road where its journey can start.

There are two main ways to get this done:

Which method you choose really just depends on your business goals and how quickly you need fresh data.
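Two patterns cover most ingestion needs: scheduled batch loads and continuous streaming. Assuming those are the two methods meant here, this Python sketch contrasts them. The batch function pulls everything newer than a saved checkpoint in one run. The streaming function hands each event downstream as soon as it arrives. The in-memory list and `queue.Queue` are stand-ins for a real database and message broker.

```python
import queue
import threading
from typing import Iterable, Iterator, Optional


def batch_ingest(source: Iterable[dict], since_id: int) -> list[dict]:
    """Batch: on each scheduled run, pull every record newer than the last checkpoint."""
    return [event for event in source if event["id"] > since_id]


def stream_ingest(events: "queue.Queue[Optional[dict]]") -> Iterator[dict]:
    """Streaming: yield each event downstream as soon as it arrives.

    A None sentinel stands in for the stream being shut down.
    """
    while True:
        event = events.get()  # blocks until the next event is available
        if event is None:
            return
        yield event


def produce(events: "queue.Queue[Optional[dict]]", items: list[dict]) -> None:
    """Simulated source system emitting events one at a time."""
    for item in items:
        events.put(item)
    events.put(None)


source = [{"id": i, "sale": 10 * i} for i in range(1, 6)]

# Batch: a nightly job whose last checkpoint was id 3 picks up ids 4 and 5.
new_rows = batch_ingest(source, since_id=3)

# Streaming: the consumer processes events while the producer is still sending.
events: "queue.Queue[Optional[dict]]" = queue.Queue()
producer = threading.Thread(target=produce, args=(events, source))
producer.start()
streamed = [event["id"] for event in stream_ingest(events)]
producer.join()
```

The trade-off is the usual one. Batch is simpler to operate and easier to re-run. Streaming delivers fresher data but needs always-on infrastructure.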

Raw data is almost never ready for analysis right out of the box. It tends to be messy, inconsistent, and unstructured. That’s where transformation steps in. If ingestion is the on-ramp, transformation is the quality control and assembly station where raw materials get turned into a polished, finished product.

This stage is arguably the most important for making sure your data is high-quality and usable. It’s where you apply business logic to make the data not just clean, but meaningful.

During transformation, your pipeline will handle a few key jobs:

One of those jobs is standardizing formats, such as converting every date to a single pattern (YYYY-MM-DD). If you skip this step, you're just feeding your analytics tools unreliable garbage, which will only lead to bad decisions. To see the full payoff of your automated flows, it also helps to understand the techniques for building custom analytics dashboards.
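Here is a small Python sketch of typical transformation jobs. It trims stray whitespace, normalizes capitalization, standardizes dates to YYYY-MM-DD, and drops duplicate records. The column names and the list of accepted date formats are hypothetical; in a real pipeline they come from your sources and business rules.

```python
from datetime import datetime

# Hypothetical date formats seen across sources; extend as new ones appear.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def to_iso_date(value: str) -> str:
    """Standardize a date string to YYYY-MM-DD, trying each known format in order."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def transform(rows: list[dict]) -> list[dict]:
    """Clean and standardize rows, keeping the first record seen for each order_id."""
    seen: set[str] = set()
    cleaned = []
    for row in rows:
        order_id = row["order_id"].strip()
        if order_id in seen:
            continue  # drop duplicate records
        seen.add(order_id)
        cleaned.append({
            "order_id": order_id,
            "region": row["region"].strip().title(),
            "order_date": to_iso_date(row["order_date"]),
        })
    return cleaned


raw = [
    {"order_id": "1001", "region": " north ", "order_date": "03/15/2024"},
    {"order_id": "1001 ", "region": "North", "order_date": "2024-03-15"},
    {"order_id": "1002", "region": "SOUTH", "order_date": "16.03.2024"},
]
result = transform(raw)
```

One caution on the design: a value like `03/04/2024` is ambiguous. This sketch reads it as US-style month/day only because that format is listed first. Making such rules explicit, per source, is exactly the business logic this stage exists to encode.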

After the data is cleaned and prepped, it needs a permanent home. This could be a data warehouse like Google BigQuery for structured data ready for analysis, or a data lake for storing massive amounts of both raw and processed data. The right storage choice really hinges on what you plan to do with the data later on.
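Whatever the destination, the load step should be safe to re-run, because orchestrators retry failed jobs. This sketch uses Python's built-in `sqlite3` module as a stand-in for a warehouse such as BigQuery; that substitution is an assumption for illustration, and warehouse client APIs differ. It shows an idempotent upsert: loading the same batch twice still leaves one row per key.

```python
import sqlite3

# In-memory SQLite stands in for a real warehouse purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE IF NOT EXISTS daily_sales (
           sale_date TEXT,
           store_id  TEXT,
           revenue   REAL,
           PRIMARY KEY (sale_date, store_id)
       )"""
)


def load(conn: sqlite3.Connection, rows: list[tuple[str, str, float]]) -> None:
    """Upsert rows so that re-running the same batch never creates duplicates."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            """INSERT INTO daily_sales (sale_date, store_id, revenue)
               VALUES (?, ?, ?)
               ON CONFLICT (sale_date, store_id)
               DO UPDATE SET revenue = excluded.revenue""",
            rows,
        )


batch = [("2024-03-15", "S1", 1200.0), ("2024-03-15", "S2", 950.5)]
load(conn, batch)
load(conn, batch)  # a retried run: still two rows, not four
count = conn.execute("SELECT COUNT(*) FROM daily_sales").fetchone()[0]
```

SQLite's `ON CONFLICT ... DO UPDATE` requires SQLite 3.24 or newer. Many warehouses, BigQuery included, offer a `MERGE` statement for the same purpose.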

Of course, none of this happens by magic. The entire show is run by an orchestrator. Tools like Apache Airflow or managed services like AWS Step Functions act as the pipeline's brain or project manager. The orchestrator schedules jobs, manages which tasks depend on others, and retries any steps that fail. It's the component that ensures every part of the process runs in the right order and at the right time, turning a bunch of separate tasks into a single, automated workflow.
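To show what an orchestrator actually does, here is a deliberately tiny Python sketch of two of those duties: running tasks in dependency order and retrying a failed step. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The task names and retry settings are hypothetical. Real orchestrators such as Airflow add scheduling, state tracking, parallel execution, and a UI on top of this core idea.

```python
import time
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
DAG = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def run_with_retries(name: str, task: Callable[[], None],
                     retries: int = 3, delay: float = 0.1) -> None:
    """Run one task, waiting a fixed delay between attempts before giving up."""
    for attempt in range(1, retries + 1):
        try:
            task()
            return
        except Exception as exc:
            if attempt == retries:
                raise RuntimeError(f"{name} failed after {retries} attempts") from exc
            time.sleep(delay)


def run_pipeline(dag: dict[str, set[str]], tasks: dict[str, Callable[[], None]]) -> list[str]:
    """Execute every task after its dependencies and return the order used."""
    order = list(TopologicalSorter(dag).static_order())
    for name in order:
        run_with_retries(name, tasks[name])
    return order


log: list[str] = []
attempts = {"transform": 0}


def flaky_transform() -> None:
    """Simulates a step that fails once (e.g. a brief outage) and then succeeds."""
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise ConnectionError("warehouse briefly unavailable")
    log.append("transform")


tasks = {
    "extract_orders": lambda: log.append("extract_orders"),
    "extract_customers": lambda: log.append("extract_customers"),
    "transform": flaky_transform,
    "load": lambda: log.append("load"),
}
order = run_pipeline(DAG, tasks)
```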

With all these moving parts, it's helpful to know what tools people actually use to build these pipelines. Different tools specialize in different stages, from pulling data to managing the workflow.

This table gives you a snapshot of the modern data stack. While some platforms offer all-in-one solutions, many of the best pipelines are built by combining these specialized, best-in-class tools to fit a company's unique needs.

Getting data pipeline automation right is about much more than just picking the newest, shiniest tools. It requires a thoughtful, deliberate strategy. Without one, you're setting yourself up for tangled workflows, surprise costs, and a very frustrated team. It's like building a house—you’d never start pouring concrete without a detailed blueprint.

A strategic approach ensures your automated pipelines don't just function, but actually deliver real business value. The goal is to build a data infrastructure that's resilient, efficient, and secure from the ground up, sidestepping the common pitfalls that trip up so many projects.

Before a single line of code gets written or a new subscription is activated, you have to define what success actually looks like. Your data strategy is that essential blueprint for the entire project. It's where you answer the fundamental questions that will steer every decision down the road.

Start by getting your stakeholders in a room and asking the tough questions:

Answering these up front keeps you from building a technically flawless pipeline that solves the wrong problem. It ties your engineering efforts directly to tangible business goals, so the end result is actually useful.

The market for automation tools is crowded, making it easy to get overwhelmed. The trick is to find technology that genuinely fits your organization's unique situation, not just what's trending on social media.

Think through these factors as you evaluate your options:

A classic mistake is to over-engineer the solution. Sometimes, a simple, managed ETL service is a far better choice for a small team than a sprawling, self-hosted orchestration platform. Start with what you need now, but have a clear plan for how you'll grow.

An automated pipeline that runs silently is fantastic—right up until it fails silently. Without great monitoring, a broken pipeline can go unnoticed for hours or even days, polluting your analytics systems with bad data and destroying trust. This is why observability—the ability to understand what’s happening inside your system just by looking at its outputs—is completely non-negotiable.

Your monitoring strategy should cover a few key bases:

This proactive mindset transforms your team from constantly putting out fires to proactively managing a healthy system.
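One of the simplest monitors to start with is a freshness check. It raises an alert when a table hasn't been loaded within its expected window, so a silently broken pipeline gets noticed quickly instead of days later. In this Python sketch the table names and SLA windows are hypothetical, and `print` stands in for whatever alerting channel your team uses.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness SLAs per table; tune them to each table's load schedule.
FRESHNESS_SLA = {
    "daily_sales": timedelta(hours=26),
    "web_events": timedelta(minutes=30),
}


def check_freshness(last_loaded: dict[str, datetime], now: datetime) -> list[str]:
    """Return an alert message for every table whose latest load is too old or missing."""
    alerts = []
    for table, sla in FRESHNESS_SLA.items():
        loaded_at = last_loaded.get(table)
        if loaded_at is None:
            alerts.append(f"{table}: no successful load recorded")
        elif now - loaded_at > sla:
            alerts.append(f"{table}: stale by {now - loaded_at - sla}")
    return alerts


now = datetime(2024, 3, 15, 12, 0, tzinfo=timezone.utc)
alerts = check_freshness(
    {"daily_sales": now - timedelta(hours=2), "web_events": now - timedelta(hours=1)},
    now,
)
for message in alerts:
    print("ALERT:", message)  # replace with your real alerting channel
```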

Data governance and security can't be an afterthought. They must be woven into your automation strategy from the beginning. Pushing them to the end is a recipe for compliance headaches and serious data breaches. The context is different, but the principles of structured, secure automation covered in this CI/CD pipeline tutorial share a similar DNA.
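Here is one concrete example of building security into the pipeline rather than bolting it on. This Python sketch pseudonymizes sensitive fields with a keyed hash (HMAC-SHA256) before the data is loaded. The field list and key handling are assumptions: which fields count as sensitive is a governance decision, and the key should come from a secrets manager, not source code. Because the same input always yields the same token, analysts can still count and join on the masked column.

```python
import hashlib
import hmac

# Hypothetical fields that a governance policy marks as sensitive.
SENSITIVE_FIELDS = {"email", "phone"}


def pseudonymize(record: dict, key: bytes) -> dict:
    """Replace sensitive values with a keyed hash before data leaves the pipeline.

    Equal inputs map to equal tokens, so joins still work. Without the secret
    key, a token cannot be linked back to the raw value.
    """
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
            masked[field] = digest[:16]
        else:
            masked[field] = value
    return masked


key = b"load-me-from-a-secrets-manager"  # placeholder only; never hard-code real keys
a = pseudonymize({"customer_id": "42", "email": "ana@example.com"}, key)
b = pseudonymize({"customer_id": "43", "email": "ana@example.com"}, key)
```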

Focus on establishing clear rules for:

By integrating these practices early, you build a data pipeline automation framework that isn't just powerful and efficient—it's also secure and trustworthy, setting your organization up for real, long-term success.

Building a powerful, automated data pipeline is a fantastic goal, but let's be honest—the path to get there is rarely a straight line. For all the talk about the benefits, the real world is messy. The journey is often littered with frustrating obstacles that can stop even the sharpest data teams in their tracks. Knowing what these hurdles are ahead of time is half the battle.

So, to successfully implement data pipeline automation, you need to be ready for these challenges. Let’s walk through the most common roadblocks I’ve seen and talk about practical ways to get around them, so your pipelines aren't just working, but are built to last.

One of the first big headaches you'll run into is managing complex dependencies. Modern data pipelines aren't simple, one-way streets. They’re more like a tangled web of tasks where one step can't kick off until several others have finished perfectly. If a single upstream task fails, it can create a domino effect, bringing the whole operation grinding to a halt.

Trying to track all these connections by hand is a recipe for disaster, especially as things scale. This is precisely where a good workflow orchestrator becomes your best friend.

By handing off dependency management to a tool designed for the job, you get a system that’s easier to understand, more reliable, and doesn't need constant babysitting.
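The domino effect itself is easy to model. This Python sketch takes a hypothetical map of which tasks consume each task's output and finds every task that must be held back when one upstream task fails. That is the kind of bookkeeping an orchestrator does for you; Airflow, for example, marks such tasks as `upstream_failed`. Tasks on unaffected branches are not blocked.

```python
from collections import deque

# Hypothetical dependency map: task -> tasks that consume its output.
DOWNSTREAM = {
    "extract_orders": ["clean_orders"],
    "extract_refunds": ["clean_refunds"],
    "clean_orders": ["revenue_report"],
    "clean_refunds": ["revenue_report"],
    "revenue_report": ["exec_dashboard"],
    "exec_dashboard": [],
}


def blocked_by_failure(failed_task: str, downstream: dict[str, list[str]]) -> list[str]:
    """Return every task that must be skipped because an upstream task failed."""
    blocked: list[str] = []
    pending = deque(downstream[failed_task])
    while pending:  # breadth-first walk over everything downstream
        task = pending.popleft()
        if task not in blocked:
            blocked.append(task)
            pending.extend(downstream[task])
    return blocked


skipped = blocked_by_failure("extract_refunds", DOWNSTREAM)
```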

Schema drift is the silent killer of data pipelines. It’s what happens when your source data's structure changes without any warning—a column gets renamed, a data type flips from a number to a string, or a new field just appears out of nowhere. A pipeline that was hard-coded to expect the old structure will either break immediately or, even worse, start quietly feeding corrupted data into your systems.

The secret to surviving schema drift is building defensive, adaptable pipelines instead of rigid, fragile ones. You have to design your system with the assumption that things will change.

Here are a few ways to protect your pipelines from the unexpected:
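As a starting point for catching drift before it does damage, this Python sketch compares an incoming record against an expected schema. It reports missing columns, unexpected new columns, and type changes. The schema contract and field names are hypothetical. What you do when drift appears (alert, quarantine the batch, or halt the run) is a policy choice.

```python
from typing import Any

# Hypothetical contract: the columns and Python types the pipeline expects.
EXPECTED_SCHEMA = {"order_id": str, "amount": float, "created_at": str}


def detect_drift(record: dict[str, Any],
                 expected: dict[str, type] = EXPECTED_SCHEMA) -> dict[str, list[str]]:
    """Compare one incoming record with the expected schema and describe any drift."""
    missing = [col for col in expected if col not in record]
    added = [col for col in record if col not in expected]
    retyped = [
        f"{col}: expected {expected[col].__name__}, got {type(record[col]).__name__}"
        for col in expected
        if col in record and not isinstance(record[col], expected[col])
    ]
    return {"missing": missing, "added": added, "retyped": retyped}


# A source renamed created_at, added a column, and started sending amount as text.
drift = detect_drift({"order_id": "A1", "amount": "19.99",
                      "createdAt": "2024-03-15", "channel": "web"})
if any(drift.values()):
    print("Schema drift detected:", drift)  # e.g. alert, quarantine, or stop the run
```

This check is strict: an integer `amount` would also be flagged, because it is not a `float`. Loosen the expected types if your sources legitimately vary.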

As your data volume explodes, checking everything for quality by hand quickly becomes impossible. A pipeline that moves data at lightning speed is useless if that data is wrong. The only reliable way to protect quality in an automated world is to build validation checks directly into the pipeline itself.

This is a fundamental shift from doing occasional spot-checks to a system of continuous, automated verification.

Key Strategies for Automated Quality Checks:

By baking these checks directly into your data pipeline automation, quality stops being a reactive fire drill and becomes a proactive, integrated part of your process.
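A common way to bake these checks in is a quality gate: a step that inspects a whole batch and stops the run if the batch fails. This minimal Python sketch assumes uniqueness, null-rate, and row-count checks as the examples; tools such as dbt ship `unique` and `not_null` tests that cover the first two. The thresholds and column names are hypothetical.

```python
class QualityGateError(Exception):
    """Raised to stop the pipeline before bad data reaches downstream tables."""


def quality_gate(rows: list[dict], key: str, required: list[str],
                 min_rows: int, max_null_rate: float = 0.01) -> None:
    """Check a whole batch; raise with every problem found, or return silently."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"only {len(rows)} rows, expected at least {min_rows}")
    keys = [row.get(key) for row in rows]
    if len(keys) != len(set(keys)):
        problems.append(f"duplicate values in {key}")
    for column in required:
        nulls = sum(1 for row in rows if row.get(column) is None)
        if rows and nulls / len(rows) > max_null_rate:
            problems.append(
                f"{column} null rate {nulls / len(rows):.0%} exceeds {max_null_rate:.0%}"
            )
    if problems:
        raise QualityGateError("; ".join(problems))


rows = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 7.5},
]
message = ""
try:
    quality_gate(rows, key="order_id", required=["amount"], min_rows=3)
except QualityGateError as err:
    message = str(err)  # in a real pipeline, fail the run and alert here
```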

As you start exploring automated data pipelines, you’re bound to run into a lot of questions. It's a field packed with overlapping terms and a dizzying array of tools, which can feel overwhelming at first. Don't worry, that's completely normal.

To help you get your bearings, we've put together some straightforward answers to the most common questions we hear. Think of this as a quick guide to slice through the jargon, clarify the big ideas, and give you practical advice you can actually use. Let's clear things up so you can move forward with confidence.

It's easy to mix up ETL and data pipeline automation, since people often use the terms interchangeably. But they aren't the same, and knowing the difference is crucial for making smart decisions about your data setup.

At its heart, ETL (Extract, Transform, Load) is a specific process for moving data. It’s like a recipe: you pull data from a source, clean it up and reshape it (transform), and then load it into a destination like a data warehouse. It’s a classic, battle-tested pattern, but it's just one way of doing things.

A data pipeline is the much broader infrastructure—it's the whole factory that moves data from point A to point B. A pipeline can run an ETL process, but it can also handle other patterns, like ELT (Extract, Load, Transform) or real-time data streaming.

Data pipeline automation is the technology and strategy that makes the entire factory run on its own, without anyone manually pushing buttons. It's the system of conveyor belts and robotic arms that executes the recipe automatically. So while an automated pipeline can run an ETL job, its scope is much bigger than that single task.

In short: ETL is a specific job, while an automated data pipeline is the self-operating system that can perform that job and many others.
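To make the ETL versus ELT distinction concrete, this Python sketch does the same cleanup both ways. It uses in-memory SQLite databases as stand-ins for a warehouse, which is an assumption for illustration. In ETL, the data is cleaned in application code before loading. In ELT, the raw rows are loaded first and transformed afterward with SQL inside the destination.

```python
import sqlite3

# Hypothetical messy source rows: padded text amounts and a duplicate order.
raw_orders = [("1001", " 20.50 "), ("1002", "5.00"), ("1002", "5.00")]

# ETL: transform in application code, then load only the cleaned result.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, amount REAL)")
cleaned = {order_id: float(amount.strip()) for order_id, amount in raw_orders}  # dedupe by id
etl.executemany("INSERT INTO orders VALUES (?, ?)", cleaned.items())

# ELT: load the raw data as-is, then transform inside the destination with SQL.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT)")
elt.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw_orders)
elt.execute(
    """CREATE TABLE orders AS
       SELECT DISTINCT order_id, CAST(TRIM(amount) AS REAL) AS amount
       FROM raw_orders"""
)

etl_rows = etl.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
elt_rows = elt.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
```

Both routes end with the same clean table. ELT has the added benefit of keeping the untouched `raw_orders` data available for re-processing later. That is one reason it pairs well with cloud warehouses, whose compute can do the transformation work.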

Choosing the right tools is a critical decision, and the answer depends entirely on your situation. There's no single "best" tool, only the best tool for the job you need to do.

Start by thinking through these key factors:

For smaller teams or common use cases, managed cloud services like AWS Glue or Google Cloud Dataflow are fantastic starting points. For complex, massive operations, you might need the power and flexibility of a custom setup using an orchestrator like Apache Airflow paired with a transformation tool like dbt. Always define your needs first—don’t just chase the most popular tool.

Yes, you can automate data pipelines without being a developer. The recent explosion of no-code and low-code data platforms has made data pipeline automation accessible to a much wider audience, not just engineers.

These tools are built for exactly this purpose:

These platforms are perfect for standard tasks, like pulling all your marketing data into a single data warehouse for analysis. It's important to know their limits, though. For highly specific business logic, obscure data sources, or really complex transformations, you'll likely need to bring in some SQL or Python to get the job done right.

Great automation doesn’t just move data faster; it moves better data. Building confidence in your automated reports means building a multi-layered defense against bad data directly into your pipelines.

Ready to stop manually wrangling customer data and start building stronger relationships? Statisfy uses AI-driven automation to turn your raw customer information into proactive, actionable insights. See how our platform can help you streamline operations, predict customer needs, and boost your retention rates.