Ever tried navigating a new city with a paper map that was printed last year? You’d probably get lost. Now, think about using a live GPS on your phone. That’s the core difference between old-school data processing and real-time data integration.

It’s all about capturing and moving information the very second it’s created. This allows businesses to react now, instead of waiting for delayed, periodic updates that are already out of date by the time they arrive.

Simply put, real-time data integration is the modern way to make information available across an entire organization the moment it happens. It eliminates the frustrating delays of traditional methods, where data is piled up in large batches and processed on a schedule, such as once a day or, even worse, once a week.

Think of it as building a digital nervous system for your business. When a customer buys something, a factory sensor sends a new reading, or someone clicks a button in your app, that piece of information instantly flows to wherever it's needed most. It could be a sales dashboard, an inventory system, or a marketing platform.

But this isn't just about being fast; it's about being relevant. Data that's even a few hours old can lose its value. On the other hand, fresh, real-time data empowers you to take immediate action, personalize experiences, and run a more agile operation. At its heart, real-time data integration is about the seamless synchronization of data between all your different systems, making sure your information is always current.

This shift from delayed to real-time isn't just a technical upgrade; it’s a strategic one. The global data integration market has been projected to grow at a 13.8% compound annual growth rate (CAGR) through 2025, a boom largely fueled by the move to the cloud and the demand for instant analytics.

To really see why this matters, it helps to put the new real-time approach side-by-side with its older counterpart: batch processing.

The Big Idea: The real game-changer isn't just how fast data moves, but what you can do with it. Real-time integration lets you respond in the moment, turning data from a historical report card into an active, strategic tool you can use right now.

To help clarify the differences, the table below contrasts real-time and batch integration. It really shows why so many organizations are moving away from scheduled data dumps and embracing instant data flows.

As you can see, the two approaches are built for entirely different purposes. While batch processing is still useful for certain tasks, real-time integration is what gives modern businesses their competitive edge.

To really get what makes real-time data integration so powerful, it helps to pop the hood and look at the core methods that make it all happen. These aren't just abstract tech concepts; they are the engines that drive instant data flow, each with a unique way of capturing and moving information at incredible speeds.

You can think of these methods as different delivery systems for your data. Just like you'd choose an instant message over an overnight courier depending on your needs, businesses pick an integration architecture based on their goals for speed, scale, and reliability.

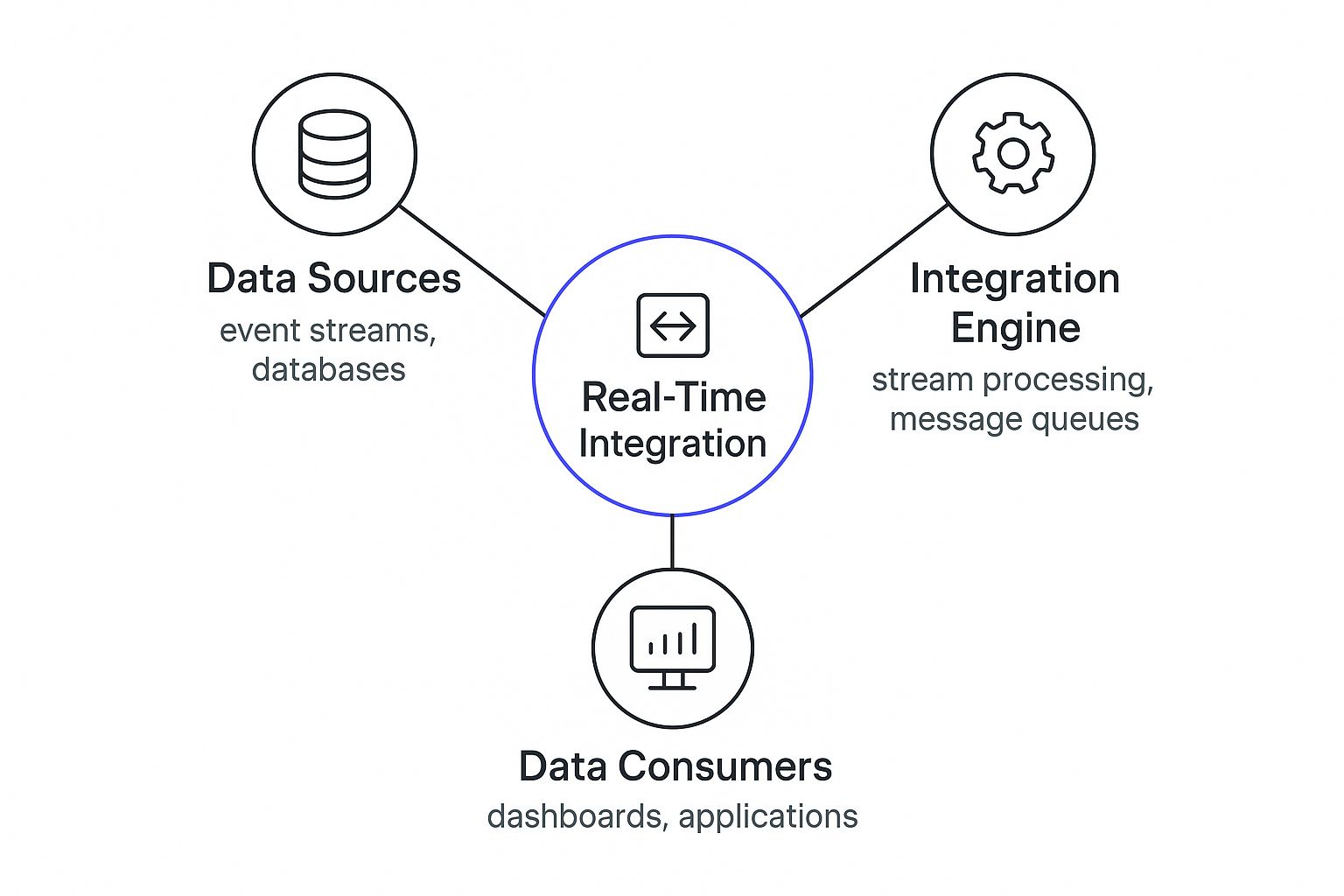

This infographic gives a great high-level view of how these pieces fit together, showing the journey from raw data to the actionable insights that business applications can use.

As you can see, the process is a continuous loop. The integration engine is the central hub, processing and routing information from sources to the systems that need it with minimal delay.

One of the most effective and popular methods out there is Change Data Capture (CDC). Imagine you've hired a security guard for your database. Instead of patrolling the entire building every hour, this guard just watches the front door and instantly logs everyone who comes in or goes out. That's pretty much how CDC works.

It keeps an eye on a database's transaction logs (the official record of every single change) and captures each new insert, update, or deletion the moment it happens. This information is then streamed to other systems. Because it reads from the logs instead of repeatedly querying the tables themselves, CDC is highly efficient and adds very little load to the source database.
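To make the consuming side of CDC concrete, here is a minimal Python sketch that applies a stream of change events to a downstream copy of a table. The `ChangeEvent` shape (`lsn`, `op`, `key`, `row`) is a hypothetical format invented for illustration; real CDC tools define their own, richer event formats and take care of reading the log for you.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional

@dataclass
class ChangeEvent:
    """One change read from a transaction log (hypothetical event format)."""
    lsn: int                              # log sequence number: position in the log
    op: str                               # "insert", "update", or "delete"
    key: int                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # new row values; None for deletes

def apply_changes(replica: Dict[int, Dict[str, Any]],
                  events: Iterable[ChangeEvent],
                  last_applied_lsn: int = 0) -> int:
    """Apply change events, assumed to arrive in log order, to a replica.

    Events at or before `last_applied_lsn` are skipped, so replaying the log
    after a restart does not apply a change twice. Returns the new position.
    """
    for event in events:
        if event.lsn <= last_applied_lsn:
            continue                      # already applied on an earlier run
        if event.op in ("insert", "update"):
            replica[event.key] = dict(event.row or {})
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_applied_lsn = event.lsn
    return last_applied_lsn

# Usage: a new customer, an upgrade, and a short-lived record that is deleted.
log = [
    ChangeEvent(1, "insert", 42, {"name": "Ada", "plan": "free"}),
    ChangeEvent(2, "update", 42, {"name": "Ada", "plan": "pro"}),
    ChangeEvent(3, "insert", 7, {"name": "Grace", "plan": "free"}),
    ChangeEvent(4, "delete", 7),
]
replica: Dict[int, Dict[str, Any]] = {}
position = apply_changes(replica, log)
print(replica)    # {42: {'name': 'Ada', 'plan': 'pro'}}
print(position)   # 4
```

Tracking the last applied log position is what lets a consumer resume after a restart without applying the same change twice.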

This makes it a perfect fit for tasks like:

Another powerful architecture is event streaming. If CDC is a security guard for one building, then think of event streaming as the central nervous system for your entire organization. It relies on a central platform, like Apache Kafka or Amazon Kinesis, to manage massive flows of "events" from all over the place. An event is just a record of something that happened: a website click, a new sale, a sensor reading, you name it.

These events get published to "topics" or channels on the streaming platform. From there, other applications can "subscribe" to the topics they care about and receive the data they need, often within milliseconds.

This approach decouples all your systems. The data source doesn't need to know who's listening, and the consumers don't need to know where the data came from. The result is a highly flexible and scalable architecture that can handle enormous amounts of data. In fact, one study found that firms mastering these automated, real-time processes achieve 97% higher profit margins than their peers.
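The decoupling described above can be illustrated with a tiny in-memory publish/subscribe broker. This is a toy stand-in, not the Kafka or Kinesis API: the `Broker` class and its `subscribe`/`publish` methods are hypothetical names, and a real platform adds durable storage, partitioning, and network delivery.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]

class Broker:
    """Toy in-memory broker: producers and consumers share only topic names."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # A consumer registers interest in a topic; it never learns who publishes.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        # A producer sends to a topic; it never learns who (if anyone) listens.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)  # number of consumers that received the event

# Usage: two independent consumers of the same "sales" topic.
broker = Broker()
dashboard: List[Event] = []
inventory: List[Event] = []
broker.subscribe("sales", dashboard.append)
broker.subscribe("sales", inventory.append)

delivered = broker.publish("sales", {"sku": "TSHIRT-M", "qty": 2})
broker.publish("clicks", {"page": "/home"})  # no subscribers yet, so nothing happens
print(delivered)  # 2
```

Adding a third consumer is one more `subscribe` call; the producer's code does not change. Unlike this sketch, which delivers synchronously and keeps nothing, a real streaming platform retains events so consumers can catch up after downtime.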

Finally, a huge number of modern integrations run on Application Programming Interfaces (APIs) and webhooks. The easiest way to think of an API is like a waiter at a restaurant. You don't walk into the kitchen yourself; you give your order to the waiter (the API), who talks to the kitchen (the other application) and brings your food back to you.

While traditional APIs often require your system to constantly ask, "Anything new yet?" (a process called polling), modern integrations use webhooks to flip that around. With a webhook, the other application automatically sends you a notification the moment something happens. It's a "push" approach that is far more efficient for real-time data integration.

This method is ideal for connecting cloud-based software. A classic example is when a new lead in your CRM automatically creates a new contact in your email marketing tool. Each of these architectural patterns offers unique strengths, allowing you to pick the right tool for the job.
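Here is a minimal sketch of the receiving end of a webhook for the CRM example above, using only Python's standard library. The payload shape (`event`, `lead`, `email`, `first_name`), the `lead.created` event name, the `/webhooks/crm` path, and the in-memory `CONTACTS` list are all assumptions for illustration; every real CRM defines its own payload format.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, Dict, List

# Stand-in for the email marketing tool's contact list (hypothetical).
CONTACTS: List[Dict[str, Any]] = []

def handle_crm_event(payload: Dict[str, Any]) -> bool:
    """Create an email contact from a (hypothetical) 'lead.created' payload.

    Returns True if a contact was created, False if the event was ignored.
    """
    if payload.get("event") != "lead.created":
        return False                      # not an event this integration handles
    lead = payload.get("lead") or {}
    email = lead.get("email")
    if not email:
        return False
    CONTACTS.append({
        "email": email.strip().lower(),
        "first_name": lead.get("first_name", ""),
        "source": "crm-webhook",
    })
    return True

class WebhookHandler(BaseHTTPRequestHandler):
    """The CRM pushes a POST here when something happens; nothing is polled."""

    def do_POST(self) -> None:
        if self.path != "/webhooks/crm":
            self.send_response(404)
            self.end_headers()
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
        except ValueError:                # covers bad JSON and bad text encoding
            payload = None
        if not isinstance(payload, dict):
            self.send_response(400)
            self.end_headers()
            return
        handle_crm_event(payload)
        self.send_response(204)           # acknowledge receipt quickly
        self.end_headers()

def serve(port: int = 8000) -> None:
    """Listen for webhooks at http://<host>:<port>/webhooks/crm (blocks forever)."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Calling `serve()` starts the listener, and the CRM side would be configured to POST to that URL. Because the CRM pushes each new lead as it is created, there is no "Anything new yet?" polling loop anywhere in this code.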

While the technical side of moving data around is interesting, the real story behind real-time data integration is all about the "why." How does this technology translate into real-world business results? The truth is, instant data access isn't just another IT project; it's a fundamental engine for growing revenue, building customer loyalty, and carving out a lasting competitive advantage.

When your data flows freely and instantly across the organization, it unlocks a much deeper understanding of your operations and your customers.

The market trends tell the same story. The global data integration market was valued at USD 15.24 billion in 2024 and is expected to explode to USD 47.60 billion by 2034. This staggering growth signals a clear global shift: businesses that get serious about instant data are the ones setting themselves up for future success.
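As a worked check, the standard compound annual growth rate formula, with $V$ denoting market value, shows what growing from USD 15.24 billion in 2024 to USD 47.60 billion in 2034 implies over those ten years:

$$
\text{CAGR} = \left(\frac{V_{2034}}{V_{2024}}\right)^{1/10} - 1 = \left(\frac{47.60}{15.24}\right)^{1/10} - 1 \approx 3.123^{0.1} - 1 \approx 0.121
$$

In other words, the forecast assumes the market grows by roughly 12.1% every year for a decade.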

This move toward immediate information changes everything. It flips the switch from reactive, after-the-fact problem-solving to proactive strategies that deliver real, measurable value.

One of the first places you'll feel the impact is in operational efficiency. Think about a logistics company. Instead of relying on yesterday's traffic data, what if they could see accidents and storms as they happen? By integrating real-time GPS and weather feeds, they can instantly reroute trucks, saving thousands in fuel, avoiding costly delays, and reducing wear and tear on their fleet.

The same idea applies to e-commerce. A brand launches a marketing campaign that goes viral—great news, right? But without real-time inventory updates, they can easily oversell a hot item, leading to a wave of canceled orders and unhappy customers. A live connection between their sales platform and warehouse solves this, showing accurate stock levels and even triggering automatic reorder alerts.
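A minimal sketch of that live connection: each sale event updates stock immediately, a sale that would oversell is rejected instead of accepted, and a reorder alert fires when stock crosses a threshold. The `Inventory` class, the `reorder_at` threshold, and the `on_reorder` callback are hypothetical; a production system would keep stock in a shared, transactional store rather than in memory.

```python
from typing import Callable, Dict, List, Tuple

class Inventory:
    """Stock levels kept current by sale events as they arrive (in-memory sketch)."""

    def __init__(self, stock: Dict[str, int], reorder_at: int,
                 on_reorder: Callable[[str, int], None]) -> None:
        self.stock = dict(stock)
        self.reorder_at = reorder_at   # hypothetical threshold that triggers an alert
        self.on_reorder = on_reorder   # hypothetical hook, e.g. notify purchasing

    def record_sale(self, sku: str, qty: int) -> bool:
        """Apply one sale event; return False (and change nothing) if it would oversell."""
        available = self.stock.get(sku, 0)
        if qty > available:
            return False
        self.stock[sku] = available - qty
        # Alert only on the sale that crosses the threshold, not on every later sale.
        if available > self.reorder_at >= self.stock[sku]:
            self.on_reorder(sku, self.stock[sku])
        return True

# Usage: a viral item with five units left and three orders of two.
alerts: List[Tuple[str, int]] = []
inv = Inventory({"HOODIE-L": 5}, reorder_at=2,
                on_reorder=lambda sku, left: alerts.append((sku, left)))
results = [inv.record_sale("HOODIE-L", 2) for _ in range(3)]
print(results)    # [True, True, False]
print(inv.stock)  # {'HOODIE-L': 1}
print(alerts)     # [('HOODIE-L', 1)]
```

The third order is refused at the moment it arrives, rather than discovered as an oversell when a nightly batch finally reconciles stock.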

Real-time data integration closes the gap between an event happening and the business being able to act on it. This small window of time is where modern competitive advantages are won or lost.

Beyond just being more efficient, instant data is also a powerful tool for managing risk. In finance, you can't afford to wait for nightly reports to spot fraud. A real-time system analyzes transactions on the fly, flagging and blocking a suspicious purchase in seconds—not hours later when the money is already gone. This protects the company, secures customer accounts, and builds incredible trust.

This proactive approach completely changes the customer experience, too. By connecting all the dots—from website clicks to support calls to in-store purchases—you can craft incredibly personal interactions.

When you're building the case for this shift, remember how the benefits of cloud computing can help you get there faster. The cloud provides the flexible, scalable foundation needed to make these real-time systems a cost-effective reality for almost any business, making this powerful strategy more accessible than ever.

The theory behind real-time data integration is interesting, but its true value really clicks when you see how it solves actual business problems. Abstract ideas about data streams suddenly become concrete strategies for stopping fraud, creating unforgettable customer experiences, and keeping production lines running smoothly.

Let's look at a few mini-case studies. Each one follows a familiar story: a company faces a nagging, expensive problem, finds a real-time solution, and sees a direct, positive impact on its bottom line. This is where the rubber meets the road.

Picture a customer browsing your online store. In the old way of doing things, the marketing team might get a report the next day showing which products were popular. With real-time integration, that entire timeline collapses into a single moment.

By closing the gap between a customer's intent and their final action, the retailer turned a passive browsing session into an active, engaging sales opportunity. That’s the magic of real-time responsiveness.

In the financial world, speed isn't just a nice-to-have; it's everything. A delay of a few seconds can be the difference between a secure transaction and a major financial loss. This is where real-time data integration becomes an absolute necessity for security.

The principle is similar to what's used in cybersecurity for continuous monitoring. Financial institutions take this a step further by streaming transaction data—location, amount, merchant details—the very instant a card is swiped or a digital payment is made. This river of data flows into sophisticated fraud detection models that compare it against the customer's typical spending patterns in the blink of an eye.

If the system flags an anomaly, like a purchase in a different country, the transaction is blocked before it can be completed, and an alert is immediately sent to the customer. This proactive defense saves the industry billions each year and is a perfect example of putting instant data to work.
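The comparison against a customer's typical spending patterns can be sketched as a per-customer profile that is checked, and then updated, as each transaction streams in. The two rules here (a purchase outside the customer's usual countries, or an amount far above their running average), the `3.0` multiplier, and the `FraudScreen`/`Profile` names are illustrative assumptions; real fraud detection models use many more signals and are usually statistical or machine-learned.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass
class Transaction:
    customer_id: str
    amount: float
    country: str
    merchant: str   # carried along; unused by the two toy rules below

@dataclass
class Profile:
    """What 'normal' looks like for one customer, learned from approved transactions."""
    countries: Set[str] = field(default_factory=set)
    count: int = 0
    mean_amount: float = 0.0

class FraudScreen:
    def __init__(self, amount_multiplier: float = 3.0, min_history: int = 5) -> None:
        self.amount_multiplier = amount_multiplier  # illustrative, not a tuned value
        self.min_history = min_history              # judge amounts only after some history
        self.profiles: Dict[str, Profile] = {}

    def check(self, tx: Transaction) -> List[str]:
        """Return reasons to block the transaction; an empty list means approve."""
        p = self.profiles.setdefault(tx.customer_id, Profile())
        reasons: List[str] = []
        if p.countries and tx.country not in p.countries:
            reasons.append(f"unusual country: {tx.country}")
        if p.count >= self.min_history and tx.amount > self.amount_multiplier * p.mean_amount:
            reasons.append(f"amount {tx.amount:.2f} far above average {p.mean_amount:.2f}")
        if not reasons:
            # Only approved transactions update the customer's profile.
            p.countries.add(tx.country)
            p.count += 1
            p.mean_amount += (tx.amount - p.mean_amount) / p.count  # running mean
        return reasons

# Usage: build up some history, then screen new transactions as they arrive.
screen = FraudScreen()
for amt in (40, 55, 60, 45, 50):
    screen.check(Transaction("c1", amt, "US", "grocer"))
print(screen.check(Transaction("c1", 52, "US", "cafe")))          # []
print(screen.check(Transaction("c1", 48, "BR", "kiosk")))         # ['unusual country: BR']
print(screen.check(Transaction("c1", 900, "US", "electronics")))  # ['amount 900.00 far above average 50.33']
```

Because the check runs per event, a non-empty result can block the payment before it completes instead of appearing in tomorrow's report.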

In manufacturing, nothing kills profitability faster than unplanned downtime. When a single machine breaks, it can bring an entire production line to a screeching halt, costing thousands of dollars for every minute of lost time. Real-time data offers a powerful way out through predictive maintenance.
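One simple way real-time data supports predictive maintenance is to keep a rolling window of recent sensor readings per machine and raise an alert when the rolling average drifts past a limit, before the machine actually fails. The vibration metric, the window size, the `7.0` mm/s limit, and the `VibrationMonitor` name are hypothetical; real deployments typically derive such thresholds from historical failure data.

```python
from collections import deque
from typing import Deque, Dict, Optional

class VibrationMonitor:
    """Rolling-average alerting on streaming sensor readings (illustrative)."""

    def __init__(self, window: int = 5, limit_mm_s: float = 7.0) -> None:
        self.window = window
        self.limit = limit_mm_s                  # hypothetical vibration limit, mm/s
        self.readings: Dict[str, Deque[float]] = {}

    def ingest(self, machine_id: str, value: float) -> Optional[float]:
        """Add one reading; return the rolling average if it breaches the limit."""
        buf = self.readings.setdefault(machine_id, deque(maxlen=self.window))
        buf.append(value)                        # oldest reading drops off automatically
        if len(buf) < self.window:
            return None                          # not enough data yet
        avg = sum(buf) / len(buf)
        return avg if avg > self.limit else None

# Usage: vibration creeping upward on one press.
monitor = VibrationMonitor(window=3, limit_mm_s=7.0)
for reading in [4.0, 4.2, 4.1, 6.5, 8.8, 9.4]:
    alert = monitor.ingest("press-07", reading)
    if alert is not None:
        print(f"press-07: rolling avg {alert:.2f} mm/s, schedule maintenance")
```

Averaging over a window keeps a single noisy spike from triggering an alert, while a sustained upward trend still does.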

The rapid adoption of these kinds of solutions shows just how valuable they are. Industries like IT, telecom, retail, and e-commerce are leading the way, using real-time integration to get a handle on massive, diverse data sources. The IT and telecom sector, for instance, held the largest revenue share in the data integration market in 2024. Why? Because they have an urgent need to analyze network performance, customer interactions, and operational logs the moment they happen. This allows them to boost efficiency and make critical decisions based on what’s happening right now, not what happened yesterday.

When you see these real-world applications, the strategic importance of instant data becomes crystal clear.

Jumping into a real-time data integration project without a solid plan is a lot like trying to build a house without a blueprint. You might get something standing, sure, but it won't be efficient, it won't be scalable, and it definitely won't meet your needs down the road. A successful rollout hinges on a strategic framework that guides you from the initial idea all the way through to long-term maintenance.

This isn't about getting sidetracked by the latest tech. Instead, think of this as a strategic checklist. It's about solving actual business problems, picking the right tools for your specific situation, and building a system that delivers value for years to come. By following these best practices, you can sidestep the common pitfalls and make sure your project actually delivers the ROI you're hoping for.

I've seen it time and time again: teams get excited about a new technology without first figuring out the problem it’s supposed to solve. This is the single biggest mistake you can make. Before you even think about evaluating a tool or writing a single line of code, you have to get crystal clear on the business goal.

What's the specific outcome you're after? Don't accept vague answers like "improving efficiency." Dig deeper.

When you anchor your project to a clear business objective, every decision that follows, from choosing a vendor to designing the architecture, becomes far easier. This focus ensures you're building a solution that delivers measurable value, not just a technically impressive pipeline that doesn't move the needle.

The market is flooded with tools that claim to be "real-time," but they are far from equal. Your choice has to be driven by your unique requirements for speed, volume, and complexity. A startup that just needs to sync two cloud apps has entirely different needs than a global enterprise processing millions of IoT sensor events every second.

As you evaluate your options, keep these critical factors in mind:

Choosing a tool that's either too lightweight or way too complex for your needs is a surefire way to waste money and doom the project. A careful, honest evaluation upfront is one of the most critical steps you'll take.

Your data needs today are just a snapshot in time. A truly successful real-time data integration architecture has to be built with the future in mind. You have to ask yourself: What happens when our data volume doubles? What new data sources will we need to add next year? How many other teams will eventually need access to this information?

Just as important is building in solid data quality and governance from day one. When data is flying around at high speeds, even a tiny error can multiply across the system in seconds, destroying trust in the data.

Make sure you implement these practices from the very beginning:

Finally, a real-time system is never a "set it and forget it" affair. Your data pipelines are critical infrastructure, and they need constant, proactive monitoring to stay healthy and performant. You need to know what's happening with your data flows immediately, not hours later when someone pulls an outdated report.

Set up comprehensive monitoring and alerting to track key metrics like data latency, throughput, and error rates. When something goes wrong—like a pipeline slowing down or a sudden drop in data volume—your team should get an alert instantly. This vigilance is what protects the reliability and integrity of your entire real-time ecosystem.
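A minimal sketch of those checks: a sliding-window tracker that records each processed event's end-to-end latency and success, then compares latency, throughput, and error rate against thresholds. The `PipelineMonitor` and `Thresholds` names, the 60-second window, and every threshold value are assumptions for illustration; in practice these metrics usually come from the streaming platform or a dedicated monitoring system rather than hand-rolled code.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional, Tuple

@dataclass
class Thresholds:                      # all values are hypothetical defaults
    max_p95_latency_s: float = 2.0     # event created -> event processed
    min_events_per_s: float = 10.0     # a sudden drop in volume is suspicious
    max_error_rate: float = 0.01

class PipelineMonitor:
    def __init__(self, thresholds: Thresholds, window_s: float = 60.0) -> None:
        self.t = thresholds
        self.window_s = window_s
        # (processed_at, latency_s, ok) for each event still inside the window
        self.samples: Deque[Tuple[float, float, bool]] = deque()

    def record(self, created_at: float, ok: bool, now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        self.samples.append((now, now - created_at, ok))

    def check(self, now: Optional[float] = None) -> List[str]:
        """Return alert messages for the current window (empty list = healthy)."""
        now = time.time() if now is None else now
        while self.samples and self.samples[0][0] < now - self.window_s:
            self.samples.popleft()     # drop samples that have left the window
        alerts: List[str] = []
        n = len(self.samples)
        throughput = n / self.window_s
        if throughput < self.t.min_events_per_s:
            alerts.append(f"throughput {throughput:.1f}/s below {self.t.min_events_per_s}/s")
        if n:
            latencies = sorted(s[1] for s in self.samples)
            p95 = latencies[min(n - 1, int(0.95 * n))]   # approximate 95th percentile
            if p95 > self.t.max_p95_latency_s:
                alerts.append(f"p95 latency {p95:.2f}s above {self.t.max_p95_latency_s}s")
            error_rate = sum(not s[2] for s in self.samples) / n
            if error_rate > self.t.max_error_rate:
                alerts.append(f"error rate {error_rate:.1%} above {self.t.max_error_rate:.1%}")
        return alerts

# Usage with explicit timestamps: 20 events/s for 60 s, then the feed goes quiet.
mon = PipelineMonitor(Thresholds(), window_s=60.0)
start = 1_000.0
for i in range(1200):
    ts = start + i * 0.05
    mon.record(created_at=ts - 0.3, ok=(i % 200 != 0), now=ts)
print(mon.check(now=start + 60.0))    # [] (healthy)
print(mon.check(now=start + 200.0))   # throughput alert: the volume has dropped to zero
```

The second check illustrates the "sudden drop in data volume" case: no individual event failed, yet the pipeline is clearly unhealthy.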

Let's be honest, picking a tool for real-time data integration can feel like a trip to an overcrowded supermarket. Every box on the shelf promises amazing results, lightning speed, and effortless connections. To get it right, you have to look past the flashy marketing and figure out what you actually need.

This isn't just about picking a piece of software. It’s a strategic move that affects your team’s workload, your budget, and how easily you can adapt down the road. The goal is to find something that solves today's problems without boxing you in tomorrow.

Before you even think about booking a product demo, stop and map out your own needs. A tool that’s a perfect fit for a retail giant could be a complete disaster for a healthcare startup. The best way to start is by asking some fundamental questions that will immediately help you cut through the noise.

Getting this part right is everything. It stops you from getting dazzled by cool features you’ll never touch and keeps you focused on what will actually make your project work.

The best tool isn't the one with the longest feature list. It's the one that solves your specific problem with the least amount of fuss. Think of your requirements list as your compass—it will keep you pointed in the right direction.

Here’s what should be on your evaluation checklist from the get-go:

Once you have a shortlist of tools that tick your main boxes, it’s time to think long-term. This means looking beyond the sticker price to the total cost of ownership (TCO) and whether the platform can actually grow with you.

A cheap tool that needs constant hand-holding from your engineers isn't a bargain. And a platform that hits a wall when your data volumes spike will become a massive bottleneck, fast.

Keep these long-term factors in mind:

By walking through these criteria step-by-step, you can confidently filter out the noise and find a real-time data integration tool that truly works for your business, supports your team, and pays for itself over the long haul.

As more businesses explore real-time data integration, some good questions always come up. Getting these sorted out is the key to understanding why this approach is so powerful and how it’s different from the older methods we’re all used to. Let's clear up some of the common points of confusion.

Not really. It’s easy to think of it that way, but their core approaches are fundamentally different.

Think of traditional ETL (Extract, Transform, Load) as a scheduled cargo shipment. It gathers a huge batch of data, processes it all in one go, and delivers it at a set time—say, once every night. It’s predictable and works in big, chunky batches.

Real-time integration is more like a live-streaming video feed. The second something happens, that piece of information is captured and sent immediately where it needs to go. It’s a continuous, event-driven flow, not a scheduled, batch-based delivery.
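The difference is mostly about when results become available, which a small Python sketch makes visible. The batch function waits until it has the whole day's records before producing anything, while the streaming generator handles each record as it arrives and emits an updated result right away. The order format and the running-revenue calculation are hypothetical stand-ins.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Order = Dict[str, float]   # hypothetical record, e.g. {"amount": 19.99}

def nightly_batch(orders: List[Order]) -> float:
    """ETL-style: extract everything collected so far, then compute once."""
    return sum(o["amount"] for o in orders)

def streaming(orders: Iterable[Order]) -> Iterator[Tuple[Order, float]]:
    """Event-driven: update the running total as each order arrives."""
    total = 0.0
    for order in orders:               # `orders` could be an endless live feed
        total += order["amount"]
        yield order, total             # an up-to-date result after every event

# Usage: same data, very different timing.
day = [{"amount": 20.0}, {"amount": 5.5}, {"amount": 12.25}]
for order, running_total in streaming(day):
    print(f"new order {order['amount']:.2f} -> revenue so far {running_total:.2f}")
print(f"nightly report: {nightly_batch(day):.2f}")
```

Both end at the same number; the streaming version simply had a correct answer after every order instead of only at the end of the day.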

Beyond getting the technology to work, a couple of "hidden" challenges often catch people by surprise. The first is managing the cost. Streaming and processing data 24/7 can get expensive, especially in the cloud, if you don't design your system carefully and keep an eye on usage.

The second challenge is often the bigger one: changing the company culture. Your teams might be used to making decisions based on reports that are a day old. Switching to information that’s only seconds old requires a huge mental shift. It forces new workflows, faster decision-making, and a focus on what's happening right now instead of just reviewing what already happened.

The real work isn't just installing the technology; it's re-engineering how your teams use information to make decisions in the moment. This cultural adaptation is where the true value of real-time operations is unlocked.

That’s a very common myth. While big companies with enormous data volumes were certainly the first to jump on board, they are no longer the only ones. Thanks to modern cloud platforms and more accessible tools, real-time data integration is now a realistic option for businesses of all sizes. A startup can use it to sync customer info between its sales and marketing apps, just like a global corporation uses it to track its supply chain.

The trick is to not boil the ocean. Start with a single, high-impact problem—like personalizing a website experience or flagging a potentially fraudulent transaction—instead of trying to rebuild everything at once.

This is a critical point. If the data flying through your systems is garbage, it doesn't matter how fast it gets there. The best way to handle this is by building your quality checks directly into the data pipeline itself. This is often called "in-flight" data cleansing.

As data streams from its source to its destination, you can automatically:

By catching and fixing problems on the fly, you make sure that only clean, trustworthy data lands in your analytics tools. This is the foundation for building confidence in your real-time insights.
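In-flight checks can be written as a small stage that sits between source and destination: each record is validated and standardized as it passes through, duplicates are dropped, and records that can't be fixed are routed to a "dead-letter" list for review instead of reaching the analytics tools. The field names in `REQUIRED`, the specific rules, and the dead-letter handling are assumptions for illustration, since the right checks depend on your data.

```python
from typing import Any, Dict, Iterable, Iterator, List, Set

REQUIRED = ("order_id", "email", "amount")   # hypothetical schema

def cleanse(records: Iterable[Dict[str, Any]],
            rejected: List[Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Validate, standardize, and de-duplicate records as they stream past."""
    seen: Set[str] = set()   # unbounded here; real systems cap it, e.g. by time window
    for rec in records:
        missing = [f for f in REQUIRED if rec.get(f) in (None, "")]
        if missing:
            rejected.append({"record": rec, "reason": f"missing {missing}"})
            continue
        try:
            amount = round(float(rec["amount"]), 2)      # "19.990" -> 19.99
        except (TypeError, ValueError):
            rejected.append({"record": rec, "reason": "amount is not a number"})
            continue
        order_id = str(rec["order_id"])
        if order_id in seen:
            continue                                     # duplicate delivery: drop it
        seen.add(order_id)
        yield {
            "order_id": order_id,
            "email": str(rec["email"]).strip().lower(),  # standardize format
            "amount": amount,
        }

# Usage: one good record, its retried duplicate, and two broken records.
incoming = [
    {"order_id": 1, "email": " Ada@Example.COM ", "amount": "19.990"},
    {"order_id": 1, "email": "ada@example.com", "amount": "19.99"},   # retry duplicate
    {"order_id": 2, "email": "", "amount": "5"},                       # missing email
    {"order_id": 3, "email": "grace@example.com", "amount": "n/a"},    # bad amount
]
dead_letter: List[Dict[str, Any]] = []
clean = list(cleanse(incoming, dead_letter))
print(clean)   # [{'order_id': '1', 'email': 'ada@example.com', 'amount': 19.99}]
print([d["reason"] for d in dead_letter])
```

Keeping rejected records, rather than silently discarding them, lets someone fix the source problem later without losing the data.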

Ready to transform your customer management from reactive to proactive? Statisfy uses AI-driven insights to turn your customer data into actionable strategies, helping you build stronger relationships and drive renewals. See how Statisfy can streamline your customer success today.